Intro

While working on my website, I got sick of the default link previews. Google is pretty good about running JavaScript to figure out what's on the page, but many platforms, such as Facebook, Twitter, and Discord, won't. Every link ends up with the same generic preview, which isn't very helpful to users.

When using something like Create React App, this becomes hard to change. You're likely making a fetch or axios request to api.your-site.com or your-site.com/api to fetch content, so your index.html is just static:

<head>
  <title>My Website</title>
  <meta
    name="description"
    content="A default description that is the same for every single page that a user links."
  />
</head>

Using Server Side Rendering (SSR)

Yes, SSR could solve this, and there are plenty of benefits to using something like Next.js. But for an existing project, it can be a big undertaking to convert to Next.js, especially when working with dozens of pages, each with their own unique fetches.

Using new technology for a large project also has some inherent risks. For example, one of my pages requires multiple requests to determine the title:

NA West Duos Queue ($50 in prizes)

("NA West" and "Duos" are determined from the queue endpoint; the $50, however, is determined from the ladders endpoint.)

Using Next.js, I would need to force users to wait for those internal requests to complete before I could even start responding to their request. Furthermore, if that specific API is slow or down, we have some edge cases we need to handle (How long do we wait for that service to respond? What do we show the user if it fails? If only one request fails, can we partially display the data from the other request?).
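To make those edge cases concrete, here is a rough sketch of one way they could be handled, wherever the requests end up running. It uses `Promise.allSettled` so that one failed request doesn't discard the other, plus a timeout so a slow service can't hold up the response. The function names, the 500 ms budget, the result fields (`region`, `mode`, `prize`), and the fallback title are all assumptions made up for illustration; none of them come from the actual site.

```javascript
// Reject if `promise` takes longer than `ms` milliseconds.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`timed out after ${ms}ms`)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Build the best title we can from two Promise.allSettled results.
// The field names here are hypothetical.
function buildTitle(queueResult, ladderResult) {
  if (queueResult.status !== 'fulfilled') {
    return 'My Website'; // nothing useful to show, so use the default
  }
  const { region, mode } = queueResult.value;
  const base = `${region} ${mode} Queue`;
  if (ladderResult.status !== 'fulfilled') {
    return base; // partial data: leave out the prize
  }
  return `${base} ($${ladderResult.value.prize} in prizes)`;
}

// fetchQueue and fetchLadder are placeholders for whatever calls your API.
async function getTitle(fetchQueue, fetchLadder, timeoutMs = 500) {
  const [queueResult, ladderResult] = await Promise.allSettled([
    withTimeout(fetchQueue(), timeoutMs),
    withTimeout(fetchLadder(), timeoutMs),
  ]);
  return buildTitle(queueResult, ladderResult);
}
```

Even in this small form, every branch is a product decision (what to show, how long to wait), which is the kind of work a migration multiplies across dozens of pages.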

This is just one example of the problems I would encounter when transitioning to Next.js, and there are more that I wouldn't find out about until the implementation was complete.

Basic Solution With Node

Luckily, we don't need SSR to do this. While not optimal, this problem can quickly be solved with an Express server:

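const fs = require('fs');
const express = require('express');

const app = express();

// Must match the default description in index.html exactly.
const DEFAULT_DESCRIPTION =
  'A default description that is the same for every single page that a user links.';

// Read the built index.html once at startup.
const indexFile = fs.readFileSync('./index.html', 'utf-8');

app.get('*', (req, res) => {
  // getTitleAndDescription (defined elsewhere) resolves to { title, desc }.
  getTitleAndDescription(req)
    .then(({ title, desc }) => {
      res.send(
        indexFile
          // Function replacers keep any "$" in the values from being
          // treated as a special replacement pattern.
          .replace('<title>My Website</title>', () => `<title>${title}</title>`)
          .replace(DEFAULT_DESCRIPTION, () => desc || DEFAULT_DESCRIPTION),
      );
    })
    .catch((e) => {
      // Fall back to the unmodified page if the lookup fails.
      console.error(e);
      res.send(indexFile);
    });
});

// Port 3012 is the one nginx proxies to.
app.listen(3012);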
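One caveat with plain string replacement: whatever `getTitleAndDescription` returns is pasted straight into the HTML, so a title containing `<` or `"` could break the markup. Below is a minimal sketch of escaping the values first. `escapeHtml` and `renderIndex` are hypothetical helper names, not part of Express, and the sample page is just the `<head>` from earlier squeezed onto one line.

```javascript
// Escape the characters that are significant in HTML text and attribute values.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

const DEFAULT_TITLE = 'My Website';
const DEFAULT_DESCRIPTION =
  'A default description that is the same for every single page that a user links.';

// Swap the default title and description for escaped, page-specific ones.
function renderIndex(indexFile, { title, desc }) {
  return indexFile
    .replace(
      `<title>${DEFAULT_TITLE}</title>`,
      () => `<title>${escapeHtml(title || DEFAULT_TITLE)}</title>`,
    )
    .replace(DEFAULT_DESCRIPTION, () => escapeHtml(desc || DEFAULT_DESCRIPTION));
}

// Stand-in for the contents of index.html.
const page = `<head><title>My Website</title><meta name="description" content="${DEFAULT_DESCRIPTION}" /></head>`;
console.log(renderIndex(page, { title: 'Duos <Queue> ($50 in prizes)', desc: 'Say "hi"' }));
```

In the route handler, `res.send(renderIndex(indexFile, { title, desc }))` would take the place of the chained `.replace` calls.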

getTitleAndDescription(req) is a function that can do anything, including making requests to your API to figure out what to render. However, this has two negatives:

- Nginx is written in C and is built for serving static files. It will generally be more performant and use fewer resources per request than Node.

- We're increasing the load on our API by making additional requests every time a user loads the page. In addition, load time will be increased (depending on your caching situation).
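The extra API load from the second point can be reduced with even a small cache in front of the lookup. Here's a rough sketch, assuming link previews are allowed to be up to a minute stale. `createCachedLookup`, the `lookup` callback, and the 60-second TTL are all hypothetical; `lookup(path)` stands in for whatever requests your API needs and should return a promise of `{ title, desc }`.

```javascript
// Wrap a lookup function in a small in-memory cache with a time-to-live.
// Expired entries are only replaced on the next request for the same path,
// so this suits sites with a bounded set of pages.
function createCachedLookup(lookup, ttlMs = 60 * 1000, now = Date.now) {
  const cache = new Map(); // path -> { promise, expires }

  return function getTitleAndDescription(req) {
    const key = req.path;
    const hit = cache.get(key);
    if (hit && hit.expires > now()) {
      return hit.promise; // fresh entry: no API request
    }

    // Cache the promise itself so concurrent requests share one lookup.
    const promise = new Promise((resolve) => resolve(lookup(key)));
    cache.set(key, { promise, expires: now() + ttlMs });

    // Don't keep failures around; the next request should retry.
    promise.catch(() => {
      const current = cache.get(key);
      if (current && current.promise === promise) cache.delete(key);
    });

    return promise;
  };
}
```

The returned function has the same shape as `getTitleAndDescription(req)`, so it could be swapped into the route handler without other changes. The `now` parameter exists only to make the expiry logic easy to test.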

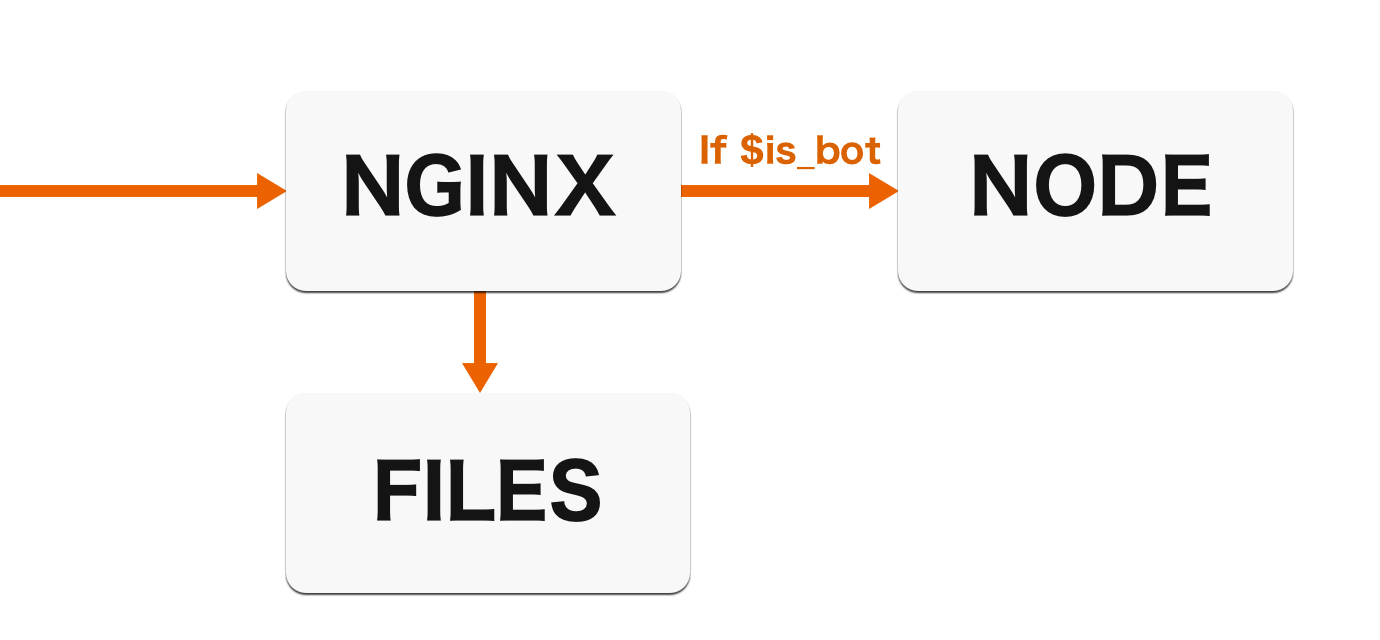

Combining With Nginx

I was using nginx at the time and wanted to continue doing so, so I ended up merging the solutions. This is how I was previously serving my index.html:

location / {
  try_files $uri $uri/ /index.html;
  # Add some headers here too...
}

And after my change:

map $http_user_agent $is_bot {
  default 0;
  ~*facebook|crawl|slurp|spider|bot|tracker|click|parser 1;
}

# Later...

location / {
  try_files $uri $uri/ /index.html;
  # Add headers...
  if ($is_bot) {
    proxy_pass http://localhost:3012;
  }
}

Here, if the user agent matches the regex (e.g. it contains facebook, crawl, bot, etc.), we'll instead forward the request to our Node server. While a user could spoof their user agent, this isn't really a security issue since we're not serving any sensitive information. Everyone else is still served the static index.html by nginx, so we don't do any of the work of generating a description for them, and their title can always be set dynamically later.
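Before deploying, it can help to check which user agents the pattern actually catches. The sketch below mirrors the regex from the `map` block in JavaScript. For a simple case-insensitive alternation like this one, the two regex engines should behave the same, but treat it as an approximation of what nginx does. `isBot` is a hypothetical helper, and the sample user agents are ones these crawlers have commonly sent, which may change over time.

```javascript
// Same alternation as the nginx map, with the "i" flag standing in for "~*".
const BOT_PATTERN = /facebook|crawl|slurp|spider|bot|tracker|click|parser/i;

function isBot(userAgent) {
  return BOT_PATTERN.test(userAgent || '');
}

const samples = [
  'facebookexternalhit/1.1 (+http://www.facebook.com/externalhit_uatext.php)',
  'Twitterbot/1.0',
  'Mozilla/5.0 (compatible; Discordbot/2.0; +https://discordapp.com)',
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
];

for (const ua of samples) {
  console.log(isBot(ua) ? 'bot ' : 'user', ua);
}
```

Short fragments like `bot` are deliberately broad, so they may also match the occasional real browser whose user agent happens to contain them. Since the Node server returns the same page with a better title and description, a false positive costs little.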

I was using Kubernetes and was able to define a single deployment with two images. However, you could do this with plain Docker containers (or just run both processes directly on a single server).

Conclusion

That was my solution: create a Node server specifically designed for requests from bots. While this might not be the best solution for everyone, I wanted to share my thought process. I may still convert my application over to Next.js at some point in the future, but it wasn't the right time when I wanted to implement this feature, and there were less risky alternatives available. Adding new technology to a large application can be a big risk, so it's important to consider your options thoroughly. Oh, and also, if people are ever linking your stuff, add link previews. They're awesome!